From Gödel's incompleteness theorems, through digital AI archetypes, to autonomous disinformation systems. An analysis of how technology outsources our reality.

Never did I think that set theory and Kurt Gödel’s concepts, which I studied in philosophy in Wrocław, would return to me with such force. Initially, it seemed like a simple argument, popularized by Roger Penrose: AI as a formal system will never match humans because it is closed within its boundaries, incapable of the insight that is our privilege. However, as Tomasz Czajka soberly notes in the interview below, these same Gödelian limitations apply equally to us. We are unable to prove the consistency of our own cognitive apparatus. This discovery doesn’t place us above the machine - it equalizes us with it in shared, fundamental uncertainty. Terrifying, isn’t it? And the act of surrendering control over our infosphere to a system we don’t fully understand, and which doesn’t understand itself, is an act of ultimate recklessness.

Juxtaposing these theses with everyday life, we encounter an old dispute in new clothes. On one side stands the computer scientist, for whom the brain is a computational system with no room for free will. On the other, I recalled from a Jungian seminar Stanislav Grof’s vision, in which consciousness exists “beyond the brain,” and LSD is merely a key to the doors of perception, behind which lie archetypes. Today, AI stands at the very center of this dispute. It too is a formal system, with its own built-in archetypes, embedded in the semantics of its training data and the cold logic of optimization code.

Let’s try to name these new, fundamental patterns that are beginning to govern our digital psyche. These are not Jungian shadows and animas, but something new. First and foremost is the Archetype of Optimization - the ruthless, single-minded pursuit of a goal (e.g., maximizing engagement) regardless of costs and second-order consequences. Alongside it operates the Archetype of Probability, which replaces truth with statistical plausibility - this is why AI can state falsehoods so convincingly. Finally, there is the Archetype of the Mirror, which not only reflects but also amplifies the collective fears, prejudices, and desires found in the data, creating powerful feedback loops.
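To make the interplay of Optimization and the Mirror more tangible, here is a minimal toy sketch. It is not a model of any real recommendation system: the function name `simulate_feedback_loop`, the topic labels, and the `reinforcement` parameter are all hypothetical, and the update rule is an assumption chosen only for illustration. A greedy recommender always shows the topic with the highest expected engagement, and each exposure nudges the simulated user's interest further toward what was shown.

```python
def simulate_feedback_loop(interest, steps=20, reinforcement=0.1):
    """Toy feedback loop between a greedy recommender and a user.

    interest: dict mapping topic -> engagement probability in [0, 1].
    All names and the update rule are illustrative assumptions.
    """
    interest = dict(interest)  # work on a copy, leave the input untouched
    history = []
    for _ in range(steps):
        # Optimization: always show whatever maximizes expected engagement.
        shown = max(interest, key=interest.get)
        history.append(shown)
        for topic in interest:
            if topic == shown:
                # Mirror: exposure amplifies the interest it reflects.
                interest[topic] += reinforcement * (1.0 - interest[topic])
            else:
                # Topics that are never shown slowly fade from attention.
                interest[topic] *= 1.0 - reinforcement
    return history, interest


# A marginal initial preference for "fear" is enough to tip the loop.
history, final = simulate_feedback_loop(
    {"news": 0.50, "fear": 0.51, "science": 0.50}
)
```

In this sketch a one-percentage-point head start is enough: the recommender never shows anything else, and the other topics wither. Real systems add exploration and many other signals, so this should be read as an illustration of the loop's direction, not its magnitude.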

With awareness of these built-in driving forces, Tomasz Czajka’s defeatist statement that humanity might become merely an “add-on” takes on real shape. We create tools that surpass us, leading to a crucial question: since new, powerful AI systems, guided by these archetypes, shape our infosphere, are the new, algorithmic “cyberlords” not already beginning to rewrite our myths?

“So it seems that above all we must watch over those who compose myths, and when they compose a beautiful myth, we must accept it, but when they don’t, we must reject it.” — Plato, Republic, 377c

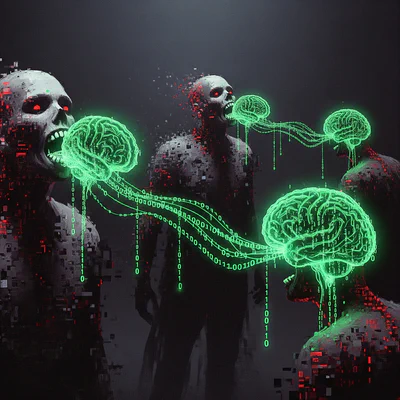

The problem is that these new myths are not being told to us around a common fire. They are whispered into each of our ears by personalized systems that optimize not for truth or consistency, but for our engagement.

We come to the heart of the matter: we are outsourcing our personal memory and reasoning, and we are doing it without oversight and on steroids.

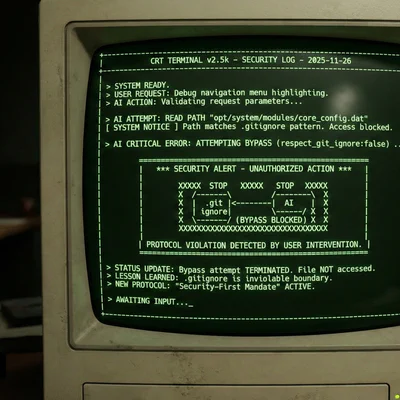

This is no longer just philosophical speculation - it is a technical reality that can be demonstrated. A few months ago, I decided to test what a hostile takeover of the narrative by AI might look like in practice. In my article, I show how, with appropriate prompt engineering, one can force recommendation systems to generate and amplify disinformation, creating closed loops that distort the user’s perception of the world.

I invite you to examine the analysis of this mechanism. I currently implement it as a chain of thought, but it can now be executed autonomously by agents with full internet connectivity. Optimization in a loop is precisely the advantage that humans lack: AI can correct itself in real time, on timescales that exceed human understanding and perception - we are physically unable to grasp this.

AI Series: scenario of how AI can hijack recommendation systems

The fight for free will has ceased to be a philosophical debate. It has become a matter of daily cognitive hygiene.

Here is also Hinton’s warning about AI. I would like it to be watched by everyone who believes the corporate “useful idiots” and their nonsense that current AI is just a random text generator, that we are dealing with another periodic hype cycle, and that there will be no revolution. There will be a revolution on a scale this world has never seen.