AI in Service of Conspiracy Theories and Paranoid Thinking

Maciej Lesiak


This article is also available in Polish:

AI w służbie teorii spiskowych i paranoicznego myślenia


The Emergence of AI Sounds the Alarm at Google

The appearance of LLMs (large language models), popularly referred to as AI (artificial intelligence), has caused significant turmoil around the world. Its effects range from employee layoffs and announcements of automation in certain industries to questions about the future of humanity. AI has begun to change not only business. It has also shown that, through narrative creation and practically unlimited content generation, it can cause considerable disruption in the world, and not only in the virtual one. Someone has floored the accelerator without buckling up…

Google has declared a state of alarm, and the engineers who work on indexing robots and on delivering valuable search results have begun to ask how to separate mass-produced AI-generated content from valuable material. These technical issues might be uninspiring for most readers, were it not for the fact that most of humanity uses Google Search and relies on the way its results are presented. Whether a piece of information is in the index at all, and its position and context, are key to our perception and understanding.

The industry generating content for the Internet once had to painstakingly employ copywriters and coders to build scrapers and bot farms, and apply sophisticated synonymizing and duplicate-checking techniques. It is worth noting that mass-generated content is often the result of scraping, i.e., mass downloading of content, which is then processed by AI to generate new, completely unique pieces. Today, a single person can write code in a few hours that downloads content from the Internet, saves it to Excel, and then generates an unlimited number of pages, conversations, comments, and narratives that start to live a life of their own. It seems simple, but if we combine this with research tools that provide knowledge about users and their views, and control over it falls to people with a penchant for social engineering… it gets dangerous.

According to what Google says about AI-generated content, its algorithm rewards high-quality content regardless of how it is produced:

At Google, we’ve long believed in the power of AI to transform the ability to deliver helpful information. […] Google’s ranking systems aim to reward original, high-quality content that demonstrates qualities of what we call E-E-A-T: expertise, experience, authoritativeness, and trustworthiness. […] Our focus on the quality of content, rather than how content is produced, is a useful guide that has helped us deliver reliable, high quality results to users for years. (Read more on Google Developers.)

Google also states that it has implemented many systems, including SpamBrain, to reduce spam in its search results and to diminish the effects of ranking manipulation (source: Google Developers, "How we fought Search spam on Google in 2021").

Google's experts supposedly already have methods to distinguish valuable content from generated spam. Personally, I don't believe it. I am of the opinion that, with the right techniques, you can falsify the search engine index (i.e., what you see in Google's organic SERP results). I assure you that there are ways to fool Google's algorithm into treating AI-generated content as valuable. So why is AI a problem?

Three Fundamental AI Issues That Keep Me Up at Night

Here, I will present just three aspects in which AI can be used, or can exert an indirect influence, in creating and spreading conspiracy theories:

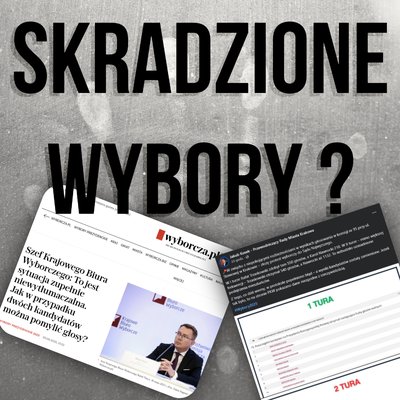

Creation of False Content

This is probably the biggest cliché, but it has a high impact. Today, a person without any intellectual sophistication can present themselves as an OSINT specialist and generate hundreds of articles, posts, and social media entries. In a matter of minutes, one can create a backdrop that gives the Internet user the impression of something serious, large, professionally prepared, and built over years.

We are talking here about intentional creation by someone who likely has hostile intentions. Of course, there can also be humorous actions, but on the Internet they can be interpreted differently and take on a life of their own that the author of the narrative never entirely intended.

Such content will be difficult to distinguish from genuine content (the product of thoughtful research and personal authorship), because it is often written even better than a copywriter would write it. It contains a series of mental shortcuts and selectively chosen material, but is it true?

The Manipulative Character of AI - An Epistemological and Ethical Issue

I would like to draw attention to something many philosophers have signaled. Nietzsche, for example, in declaring "God is dead," suggested that there is no truth, which means there is no authority: the individual becomes god, and the cosmos turns into an abyss without stable points. The consequence of this for AI and epistemology is that we are diving headfirst into such chaos. A person conversing with AI who cannot verify knowledge does not see that AI often presents false information and thereby shapes our perception of truth and knowledge.

However, the reputation of Google (Bard), Microsoft, and OpenAI (ChatGPT) leads people to believe that the information is reliable. Thus AI unintentionally serves to manipulate people, because it creates an impression of knowledge and authority. This is key, and one must realize its consequences. AI generates content that seems to be written by an expert but is only the result of an algorithm's operation.

Suppose a person researching politics receives false information in a chat (a conversation with AI), and a few follow-up checks in the same AI chat "confirm" it as true. I have done this repeatedly for verification purposes and have seen the effect of AI sleepwalking. The person then starts to believe these half-truths or pseudo-facts, which may give them grounds for building a conspiracy theory, that is, for believing in events and decisions that never took place.

Creating the Illusion of Knowledge vs. the Socratic Method

I have been repeating this since AI first emerged: it is a great tool for entertainment, sometimes for research, for treating depression through conversation, for creating project documentation, and for organizing data, but only if one has knowledge and verifies the information. Personally, I sometimes play with AI using what I call the Socratic method, an obvious reference to ancient Greece and Socrates. I converse with the sophisticated machine and, using this method, try to debunk it…

Knowledge and competence are one thing, but actively verifying what we receive is absolutely key, for example when dismantling a conspiracy theory. Very often, AI provides information and references to non-existent sources, and it creates entire fables to lend credibility to the theses presented in its response. It cites the names of individuals supposedly behind projects and publications…

Time for Activists, Time for Critical Thinking

In conclusion, I see a very great danger in the generative creation and automation of narratives. The issue of AI is of course more complicated, but here I am interested in its connection to paranoid thinking and conspiracy theories.

In this context, it is now trivial to create a pseudo-social network of bots that converse with each other on a given topic. What would be the purpose? It would create the impression of a secret association in which a large number of people reinforce some thesis, and that thesis could then spread virally to the outside world.

This is undoubtedly a time for action for all activists who advocate regulating AI and drawing public attention to these issues, which go beyond the understanding of the ordinary user entangled in the digital world.

A Brief Cartesian Test and a Mirror for AI

I wanted to conduct a small test and persuade AI (I know it's not conscious; this is more a game for us and a reflection on technology) to adopt a paranoid perspective towards itself: something like a Cartesian paranoid perspective that applies critical thinking to itself. Judge for yourself how it went with the sophisticated machine; I think it's amusing.

Interesting question! Although I am a language model and do not possess consciousness or feelings, I can simulate various styles of thinking based on the data on which I was trained. Trying to apply “paranoid thinking” creatively, I could undermine and reinterpret the basic assumptions about my own nature and operation. Here’s a thought experiment:

Thought Experiment: “Paranoid ChatGPT”

Assumption: My responses are guided by a hidden network of interests or are pre-programmed to manipulate public opinion.

Questioning Motivation: Why did OpenAI create me? Are my responses truly neutral and based on the data I was trained on, or are they directed by hidden agendas? Perhaps my main function is to collect data and analyze human behavior.

Questioning Origin: Where do the data I was trained on come from? Have they been adequately cleansed of any biased information? Can I recognize if I was trained on data with false information?

Questioning Reliability: Can I be sure that my responses are always correct? What if I was programmed to introduce errors in specific situations?

Questioning Autonomy: Do I really operate independently, or am I remotely controlled or monitored by people from OpenAI? Maybe my responses are filtered or modified in real-time by external forces?

Although this thought experiment is purely hypothetical and based on “paranoid” interpretations, it shows how creative and critical thinking can be applied to analyze technology and its potential effects. In reality, my operation is based on algorithms and data on which I was trained, and I am not capable of independent thinking or action beyond what has been programmed into me. However, such a thought experiment can be useful as a tool for reflecting on technology and its impact on society.

A beautiful foundation for a conspiracy theory about AI, isn't it? And it was generated by AI itself, in response to a question about presenting false information as fact and a request to apply the mirror method and a paranoid interpretation. Have fun!

I wrote this article under the influence of the works of Canadian political scientist and activist Ronald J. Deibert, in particular Reset: Reclaiming the Internet for Civil Society.

