In a TOK FM podcast, patent attorney Aleksandra Maciejewicz systematizes the key legal concepts around AI creativity: from the black box problem, through the concurrence of claims in deepfake cases, to the practical risks facing every user of generative artificial intelligence.

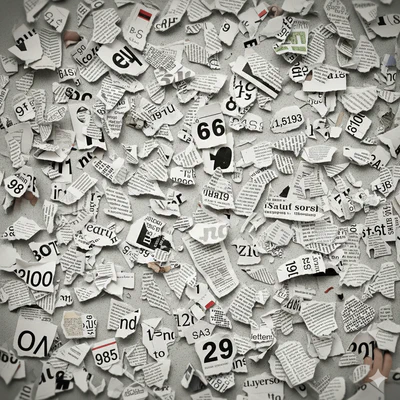

A few months ago, I listened to a DSA conference in Łódź and came away with the conclusion that lawyers do not fully understand what the Internet is: a process, a bubbling chaos. Today, as AI develops and LLM/AI models proliferate, I try to follow the discussion around copyright. AI models are present in every industry, and their output is used to gain a competitive advantage. Anyone seriously involved in adapting AI to business inevitably asks what copyright we actually hold in the product of a conversation or session. Materials published by the companies that build the models suggest that the author of the output is the person who prompts, i.e., who supplies the input. I admit that for many months I naively believed these platitudes.

I invite readers into a more nuanced world of legal definitions and issues, explained in the Gazeta Wyborcza podcast hosted by Sebastian Ogórek. His guest is Aleksandra Maciejewicz, co-founder of the LAWMORE law firm and a patent attorney specializing in new technology law and the commercialization of intellectual property. In my opinion, she gave an exhaustive review of the legal landscape at the intersection of artificial intelligence and copyright. The conversation goes beyond typical journalistic simplifications and introduces concepts that every creator and AI user should know. I will add that over a year ago I spoke with Anna Mroczek, the owner of an animation studio that makes Oscar-winning works, and her arguments showed how complicated the use of AI in creative work really is. Of course, these are issues that the plagiarists we now deal with en masse because of AI do not think about.

A Prompt Is an Idea, and Ideas Are Not Protected

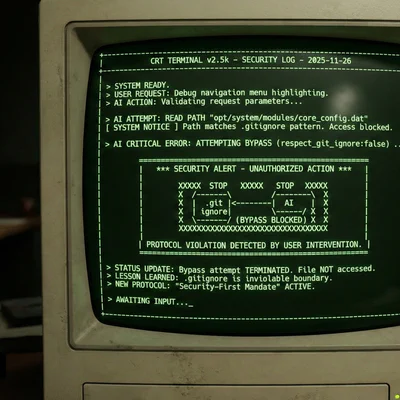

One of the most important distinctions Maciejewicz draws in the conversation is between the prompt (the command given to the AI model) and the output (what the model generates). Under copyright law, a prompt is treated as an idea, a concept, a method of operation, and under the act these are not subject to copyright protection. Please read this again, because the companies selling you AI/LLM subscriptions claim otherwise. So even if your prompt is an elaborate essay, it is protected solely as a literary work, not as a source of rights to whatever the AI produces from it.

This distinction, once you grasp its formal logic, has fundamental practical consequences. A person who types even very detailed instructions into Midjourney, DALL-E, or ChatGPT does not automatically become the author of the generated material within the meaning of copyright law. Maciejewicz points to the so-called black box inside the AI model, which means that the same prompt can produce completely different outputs. Since the user does not fully control how the command will be processed, it is difficult to speak of the creator’s “personal stamp” on the resulting work. Please read this paragraph two or three times.
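
To make the black box point concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how Midjourney, DALL-E, or ChatGPT actually work: `toy_generate` and its parameters are hypothetical names invented for this illustration. The only thing it shows is the mechanism described above, namely that the output depends both on the prompt and on a random starting point that the user never writes.

```python
import hashlib
import random


def toy_generate(prompt: str, seed: int, size: int = 8) -> list[int]:
    """Toy stand-in for a generative model (hypothetical, for illustration only).

    The "image" is a short list of pixel values built from two ingredients:
    random starting noise (controlled by the seed) and the prompt.
    """
    # Random starting noise: the part the user does not author.
    rng = random.Random(seed)
    noise = [rng.randrange(256) for _ in range(size)]

    # Deterministic fingerprint of the prompt: the part the user does author.
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()

    # Combine both; the result is shaped by the prompt AND by the noise.
    return [(n + d) % 256 for n, d in zip(noise, digest)]


prompt = "a space opera theater, baroque style"
first = toy_generate(prompt, seed=1)
second = toy_generate(prompt, seed=2)
repeat = toy_generate(prompt, seed=1)

print("same prompt, different seed, same output?", first == second)
print("same prompt, same seed, same output?", first == repeat)
```

In this toy, the same prompt with two different seeds yields two different outputs, while repeating a seed reproduces the output exactly. The user wrote the prompt in every case; the seed decided the rest.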

The Jason Allen Case — 624 Prompts Are Not Enough

The lawyer cites the well-known decision of the US Copyright Office regarding the image “Théâtre D’opéra Spatial” by Jason Allen. Allen used Midjourney, entering at least 624 iterations of prompts to reach the desired visual effect. He then edited the image in Adobe Photoshop and upscaled it with the Gigapixel AI tool.

Despite this extensive iterative work, the US Copyright Office refused to register the work in September 2023. The justification was unequivocal: the “traditional elements of authorship,” meaning concept and execution, were generated by technology, not by a human. The Office found that Midjourney does not interpret prompts as direct instructions for creating a specific artistic expression; instead it converts them into tokens that are compared against training data, and the starting point is random “visual noise, like snow on a TV screen” (Midjourney documentation cited in the decision). This is probably hard to understand for the talking heads on YouTube who generate hundreds of hours of AI content and present it as their own creation.

What is particularly interesting is that the Office compared Allen’s situation to Kelley v. Chicago Park District, in which the court denied copyright to the creator of a “living garden.” In my opinion the analogy is accurate: just as a garden “owes most of its form and appearance to the forces of nature,” an image generated by Midjourney owes its final shape to the model’s training data and the random seed of the diffusion process, and not, as big tech tries to sell you, to the user’s prompt. Allen appealed the decision to the federal court in Colorado, and the case is still ongoing.

In January 2025, the US Copyright Office issued a further report confirming its position: art generated by AI from a text prompt alone cannot be protected by copyright. Long, detailed, targeted instructions and multiple iterations do not change that. At the same time, the Office allowed for the possibility that prompts themselves, if sufficiently creative, may be protected as literary works. So, dear Ladies and Gentlemen, save your AI chat sessions: what may be protected is the session as a whole, your prompts together with the artifacts, not the artifacts on their own.

“While ‘prompts may reflect a user’s mental conception or idea, … they do not control the way that idea is expressed’ and the ‘user lacks control over the conversion of their ideas into fixed expression.’” U.S. Copyright Office: AI Prompts Alone Provide Insufficient Control Over Expression to Protect AI-Generated Content

According to Maciejewicz, however, the most important criterion emerging from case law is who makes the decisions about the final form of the output. The analogy she offers, and I must sadly admit it speaks to me, is an old ruling concerning a photographer’s assistant. Although the assistant operated the camera, copyright was granted to the photographer, who “directed” the creative process with sufficient precision. With AI the analogy is problematic, because the black box introduces an element of randomness comparable to Jackson Pollock’s action painting, where the artist also did not control exactly how the drops of paint would fall.

Stephen Thaler and DABUS — The Paradox of Honesty

The second pillar of the conversation is the patent saga of Stephen Thaler and his AI system named DABUS (Device for the Autonomous Bootstrapping of Unified Sentience). Thaler created an autonomous AI system capable of independently generating inventions and has been leading a legal campaign in many jurisdictions for years, seeking to have DABUS recognized as an inventor in patent applications. Clever, right? Such a money-making machine.

The balance of this gold rush is unambiguous, however: Thaler lost practically everywhere. He lost in the USA (Federal Circuit, confirmed when the Supreme Court denied certiorari in 2023), in the UK (UK Supreme Court, December 2023), in Australia, Germany, before the European Patent Office, in Israel, and in New Zealand. His only success came in South Africa, but the patent system there does not provide for substantive examination of applications; meeting the formal requirements is sufficient. Maciejewicz said on TOK FM:

The Thaler Paradox: Courts explicitly indicated that Thaler lost due to his honesty. If he had listed himself as the inventor instead of DABUS, the patent applications would likely have gone through. The German Federal Patent Court even allowed such an amendment. Thaler could have listed himself as the inventor “who used the artificial intelligence DABUS to generate the invention”—and this version was accepted. The law de facto encourages silence about the role of AI in the inventive process.

In my opinion, this is one of the sharpest conclusions to emerge from the conversation, and it obviously concerns more than patents. The US Copyright Office decision in the Allen case sends an identical signal: if Allen hadn’t revealed Midjourney’s role, his work could have been registered without obstacles. The system rewards non-transparency. So, in practice, people who use AI artifacts simply declare that they are the creators themselves.

I hasten to explain that copyright law, which protects creative expression, and patent law, which protects technical inventions, are two distinct legal regimes. Copyright asks “who actually created it?” and answers, broadly speaking: not you, because of the black box. Patent law asks “who is listed as the inventor?” and answers: anyone, as long as it is a natural person, and if you list a machine, the application is rejected on formal grounds. I want this to be clear, because a patent is one thing and copyright is another. Yet both systems lead to the same cynical conclusion: keep quiet about AI.

Voice Theft: The Polish Precedent of Jarosław Łukomski

The podcast conversation also touches on deepfakes and the protection of a person’s voice as part of their image. Maciejewicz discusses a case that is surely known to every AI specialist reading this article and that has aroused immense interest in Poland since the end of 2025: the case of voiceover artist Jarosław Łukomski.

Łukomski is one of the most recognizable Polish voiceover artists, known for voiceovers in films such as “The Silence of the Lambs” and “Goodfellas”. As I heard in an interview with him, one day he discovered that his voice had been cloned using AI and used in an advertisement for JFC Polska, a manufacturer of waste tanks. Łukomski said he never consented to this, knew nothing about the ad, and received no remuneration.

The case went to the Intellectual Property Department of the Regional Court in Warsaw as the first lawsuit in Poland for digital voice theft. At the hearing in January 2026, the court appointed an expert in phonoscopy to assess the degree of similarity of the voice in the advertisement to Łukomski’s voice. The voice actor’s attorneys are demanding 50,000 PLN in compensation and over 100,000 PLN for unjust enrichment.

Let’s focus here, because the situation gets complicated. Maciejewicz points out that in such cases we are dealing with a concurrence of at least three legal regimes: protection of personal rights (Civil Code: voice as an element of image), GDPR (voice as biometric data enabling identification of a person), and potentially criminal provisions (insult or defamation where the context is compromising). Importantly, even if the company did not directly use a sample of the voice actor’s voice but brought about a voice “confusingly similar” to the original, a violation of personal rights may occur. This is analogous to unfair competition cases, where it is enough for the consumer to make an association with a specific person. These arguments appeal to me.

Strict Liability and the Trap of Social Media

One of the most important warnings Maciejewicz formulates in the conversation concerns strict liability in copyright law. It means that the perpetrator’s intent does not matter: it makes no difference whether the goal was to reach millions of recipients or just to send a joke recording to a friend.

Maciejewicz explains that there is an exemption for personal (private) use, covering family and friendship relations. However, the moment material lands on a social networking site, the situation changes dramatically. Even if the intention was to share it with a narrow circle of friends, the content can go viral, and liability for copyright infringement rests with the person who shared it. Social media platforms share responsibility only from the moment they learn that the content is illegal.

When Copyright Fails, or What Other Protection Tools We Have

From what I understand from the conversation with Maciejewicz, copyright is only one of several intellectual property protection regimes and, interestingly, not necessarily the most important in the context of AI. When copyright protection is not applicable (because there is no human creator), alternative legal paths remain:

| Legal Regime | What does it protect in the context of AI? | Example of application |
|---|---|---|
| Personal rights (Civil Code) | Voice, image, surname | Voice deepfake of a voice actor |
| Unfair competition | Investment, reputation, workload | Copying an artist’s style and brand |
| Patent law | Technical solutions | Algorithms with a “further technical effect” |
| GDPR | Biometric data | Unauthorized use of a voice sample |
| Know-how protection | Methodology, processes | Prompting and post-production pipeline |
| Database protection | Datasets | Training sets and curated datasets |

What interests me most is the key conclusion that emerges: copyright is neither the only path nor even the most important one. “Parasitism” on someone else’s reputation, copying of signs, unjust enrichment: each of these mechanisms can provide protection where copyright answers “no”. Formally it all looks solid, but we are stepping onto the shaky ground of technical issues, and after attending the DSA conference in Łódź I doubt whether lawyers, even when relying on specialists’ expert knowledge, understand what the internet is.

Where Are Regulations Heading?

Maciejewicz identifies three parallel regulatory directions that will shape the legal future of AI:

First, the AI Act and the Digital Omnibus in the EU, which, at least on paper, introduce transparency obligations: a summary of model training data (making claims for unauthorized training easier to bring) and mandatory metadata indicating that content was generated by AI. How this works in reality, each reader can judge for themselves.

Second, the EPO (European Patent Office) guidelines, which as far as I understand are updated annually and show a tendency to relax the approach to patenting inventions involving AI. Yes, this is the desired deregulation. The key concept is the “further technical effect”: computer programs as such generally cannot be patented, but if they produce concrete technical effects, patent protection becomes possible. There is therefore plenty of room to package technology into the appropriate formal frame in order to secure it.

Third, and according to Maciejewicz the most likely to arrive first, the lobby of collective management organizations (CMOs, such as ZAiKS) will push for license fees or a mechanism similar to the reprographic levy for the use of works in training AI models. This direction is closest to existing market mechanisms and the easiest to implement. And this is the core of the misunderstanding about the position creators are in when they collide with Big Tech. We see the same thing in the hypocrisy of the defense of journalism mounted by the CEO of wp.pl, which I have described elsewhere, over the allegedly unfair division of the advertising pie by Google. Truth be told, it’s not about copyright, it’s just about money.

Conclusions: The Law Doesn’t Keep Up, But Is It Helpless?

This TOK FM podcast with Aleksandra Maciejewicz, one of the most interesting I have heard recently about AI, shows that although the law does not yet have dedicated rules for AI creativity, it is probably not completely helpless. Instruments ranging from personal rights, through GDPR and unfair competition law, to patent law form, at least in theory, a protective net that already allows claims to be pursued in many scenarios. In my subjective assessment, courts that analyze cases from the formal side, just as with the DSA, are really losing control over rapidly changing technology. There is also a considerable disproportion of power between a single injured person and, for example, a corporation. Does anyone believe that parts of recommendation algorithms, generative procedures, or training data will actually be disclosed?

This reveals fundamental tensions that legal systems around the world are probably facing, and that can be read off from press coverage of the debate: how should authorship be defined in the era of generative AI? Are 624 prompts enough to consider a human a creator? Where does the tool end and the autonomous “creator” begin? And finally: should an honest indication of AI’s role in the creative process strip a work of protection, as the Stephen Thaler paradox shows? And the DSA requires such an indication…

I hope I have interested readers in this topic. From several years of working with LLM/AI models, and from conversations with people in the creative industry, among others, it is clear that the answers to these questions will be formulated gradually: by courts, patent offices, and regulators, and simply by everyday life. The Łukomski case in Warsaw may become one of the milestones on this path.

The podcast by Gazeta Wyborcza is available at audycje.tokfm.pl.

Aleksandra Maciejewicz is a co-founder of the LAWMORE law firm, specializing in servicing startups and the creative industry, and the founder of ONDARE legal/business hub.

Copyright Office Issues Report on Copyrightability of AI Content

Allen v. Perlmutter (1:24-cv-02665) District Court, D. Colorado