A look at the puzzling phenomenon of Russian words appearing in AI model responses. Could the cause be an IP geolocation error rather than deliberate disinformation? A hypothesis based on personal experience.

For quite some time now, during intensive testing of various language models (Grok, NotebookLM, Gemini, as well as Anthropic models), I’ve been documenting an intriguing, recurring phenomenon: Russian insertions such as “доступа” (“access”) or “первые” (“first”) regularly appear in model responses, even though the entire conversation is conducted in Polish or English. I’ve also encountered a few Chinese insertions, but the overwhelming majority are Russian.

Before presenting my hypothesis, however, I need to make two important caveats.

First, I treat this post as a “signal” and preliminary observation, not rigorous analysis. I am neither a scientist nor a researcher of LLM architecture. I haven’t conducted systematic tests or controlled variables. This is a record of personal experience that I hope will provoke further investigation.

Second, I’m aware of the possibility of confirmation bias. I’ve noticed that Russian “bleed-throughs” appear almost exclusively during analysis of geopolitically charged topics: the alt-right bubble, US security issues, USAID activities, or the Project 2025 document. Am I seeing these anomalies because I’m subconsciously looking for them in contexts where I expect a Russian trace? It’s possible. However, it’s worth noting that with completely neutral topics (technical, culinary, historical), I haven’t observed this phenomenon. This distinction seems crucial to me, though confirming it would require systematic research.
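Because the topic-dependence would need systematic checking, here is a minimal Python sketch of how one might tally Cyrillic insertions per topic across logged model responses. It assumes responses are collected as (topic label, response text) pairs; the function names and sample data are hypothetical. Note that it detects the Cyrillic script, not the Russian language specifically, so Ukrainian or Bulgarian words would be counted too.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Runs of characters from the basic Cyrillic Unicode block (U+0400 to U+04FF).
CYRILLIC_WORD = re.compile(r"[\u0400-\u04FF]+")


def cyrillic_words(text: str) -> List[str]:
    """Return every run of Cyrillic characters found in a model response."""
    return CYRILLIC_WORD.findall(text)


def tally_by_topic(responses: Iterable[Tuple[str, str]]) -> Dict[str, Tuple[int, int]]:
    """Map each topic label to (responses containing Cyrillic, total responses)."""
    hits: Counter = Counter()
    totals: Counter = Counter()
    for topic, text in responses:
        totals[topic] += 1
        if cyrillic_words(text):
            hits[topic] += 1
    return {topic: (hits[topic], totals[topic]) for topic in totals}


if __name__ == "__main__":
    # Hypothetical logged responses as (topic label, response text) pairs.
    sample = [
        ("geopolitics", "The report limits доступа to the archive."),
        ("culinary", "Knead the dough for ten minutes."),
        ("geopolitics", "Project 2025 proposes several changes."),
    ]
    print(cyrillic_words(sample[0][1]))  # ['доступа']
    print(tally_by_topic(sample))  # {'geopolitics': (1, 2), 'culinary': (0, 1)}
```

Comparing the hit ratio across topic labels, over many prompts and with topics assigned before reading the responses, would be one way to test whether neutral topics really stay clean.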

With these caveats in mind, I began searching for the simplest technical explanation, avoiding conspiracy theories. This led to my working hypothesis, which I’d like to propose as a counterpoint to existing theories about Russian bleed-throughs in the infosphere.

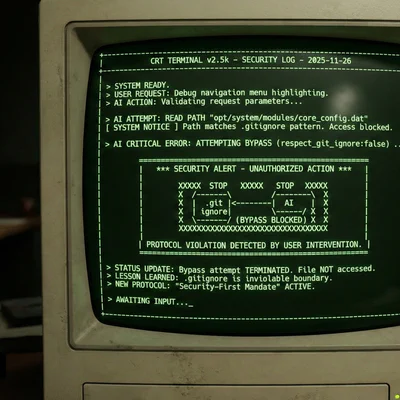

LLMs don’t rely solely on prompt content. They receive broader context (system context, which I’ve previously written about in connection with time tokens as a source of time awareness), including approximate geographical location based on IP address. This immediately brought to mind a problem I faced professionally: for years, a pool of IP addresses from OVH, physically located in Europe, was incorrectly identified by many systems as originating from Moscow. This error in the RIPE databases caused real problems. Moreover, even today, using a public address outside the OVH pool, I am shown content about the war in Ukraine targeted at Russian-speaking audiences on some sites. Their creators want to show Russians information about the war, and I apparently see it because my IP is misidentified.
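To make the mechanism concrete, here is a simplified Python sketch of prefix-based IP geolocation. Many geolocation databases map network prefixes to countries and answer a query with the most specific prefix that contains the address. The table, the prefixes (taken from the RFC 5737 documentation ranges), and the `lookup_country` function are hypothetical and do not reflect any real provider’s data or API.

```python
import ipaddress
from typing import Optional

# Hypothetical geolocation table of (network, ISO country code) records.
# The prefixes are RFC 5737 documentation ranges, not real OVH address pools.
GEO_TABLE = [
    (ipaddress.ip_network("198.51.100.0/24"), "PL"),
    # Stale record: the whole /24 is still tagged as Russian after reassignment.
    (ipaddress.ip_network("203.0.113.0/24"), "RU"),
    # A newer, more specific record that corrects only the upper half of the block.
    (ipaddress.ip_network("203.0.113.128/25"), "FR"),
]


def lookup_country(ip: str) -> Optional[str]:
    """Return the country of the most specific (longest) prefix containing ip."""
    addr = ipaddress.ip_address(ip)
    best_net, best_country = None, None
    for net, country in GEO_TABLE:
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_country = net, country
    return best_country


if __name__ == "__main__":
    for ip in ["198.51.100.7", "203.0.113.10", "203.0.113.200", "192.0.2.1"]:
        print(ip, lookup_country(ip))
```

Running it prints `PL`, `RU`, `FR`, and `None` for the four addresses. The lookup logic is correct; the error lives in the stale record, so every system that consults the same table reports 203.0.113.10 as Russian regardless of where the machine actually is, much like the OVH case described above.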

So here is my working hypothesis: what if LLM services use the same imperfect geolocation databases?

My thought experiment is as follows: the system receives conflicting signals. On one hand, my prompt and browser settings indicate Poland. On the other hand, an incorrect IP geolocation entry may suggest a Russian context to the model. When confronted with topics that may be strongly associated with Russian-language sources in the training data (e.g., disinformation), the model may “get confused” and insert Russian tokens.

Of course, this hypothesis has weak points. The key question is: why almost exclusively Russian? If the problem were faulty IP databases in general, other languages should also appear. Perhaps it’s a confluence of several factors: the specifics of the incorrectly tagged address pools, the linguistic proximity of Polish and Russian, which facilitates “jumps” at the token level, and the aforementioned thematic bias. However, this is pure speculation.

Other, deeper causes might also be at play, such as training-data contamination (which has been widely written about) or unexpected associations in the network’s hidden layers (leakage). Nevertheless, the persistence and specificity of the problem make the hypothesis that incorrect GeoIP signals play a role intriguing and worth further investigation.

So I’m leaving this here as a signal, without drawing premature conclusions. Perhaps some of you have the knowledge or tools to verify or refute this hypothesis.

(*) It’s worth noting that there are two main ways of obtaining location data. In this article, I focus on IP address-based geolocation, which works passively on the server side and is susceptible to database errors. A different mechanism is precise device-based location (GPS, Wi-Fi), which requires the browser to actively request the user’s consent.