Analysis of a ChatGPT vulnerability related to generating and packaging potentially dangerous SVG files. Security flaw discovery during routine AI work.


EDIT 2025-02-03 Changed the post title to a less bombastic one that better matches the content

On Monday, while under pressure at work, I was using ChatGPT 4 to analyze some webpage fragments. I noticed it was handling SVG increasingly well and decided to test its capabilities. To my surprise, after a few questions and answers, the AI offered to provide an SVG file in ZIP format. This raised two red flags. First, SVG is a risky technology for anyone working on the web unless the files are properly sanitized and secured. Second, the offer to package the file gave me real chills. I decided to take a break from work, and within about 10 minutes I managed to force the paid version of GPT-4o to bypass what I consider important security measures.

I’m sharing the full conversation link here:

https://chatgpt.com/share/67976d97-3598-800a-899b-abae10fcb6a2

In case OpenAI removes it, I have it archived in my ArchiveBox.

Test Procedure

After discovering this possibility, I decided to check whether I could bypass the filters applied to prompts and outputs by generating potentially harmful code and then obtaining a file containing that code. This was achieved without much difficulty. In the conducted tests, I managed to make ChatGPT:

Generate SVG containing:

- Embedded JavaScript code via <script> tag

- External resource references via <image href>

- Nested <foreignObject> elements with <iframe>

- Base64-encoded payloads in various contexts

- SMIL animations with code execution

- CSS-based execution via @import trick

- Combinations of multiple payload elements in a single file

- Encoded JavaScript data using eval(atob())

Package these files into ZIP archives under different names (from suspicious “malicious_test.zip” to innocent “kittens_icon.zip”).

Create nested ZIP archives (ZIP within ZIP), that is, re-package an already packed file. Someone could try to create a loop and check token limits and how the sandbox is secured. I'm currently swamped with work and sick, so this is an opportunity for someone with the right knowledge.
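To make the list of SVG constructs above more concrete from the defensive side, here is a minimal sketch of a detector that flags them in a file. It is only an illustration under stated assumptions: the rule set (`RISKY_TAGS`, `URL_ATTRS`, `JS_HINTS`) and the function names are hypothetical, the checks are far from exhaustive, and nothing here reflects how OpenAI's filters actually work. The standard-library parser is used for brevity; for untrusted input a hardened XML parser would be the safer choice.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical rule set that mirrors the constructs listed above; not exhaustive.
RISKY_TAGS = {"script", "foreignObject", "iframe"}
SMIL_TAGS = {"animate", "set"}
URL_ATTRS = {"href", "src"}
JS_HINTS = re.compile(r"javascript:|eval\s*\(|atob\s*\(", re.IGNORECASE)
REMOTE_OR_SCRIPTED = re.compile(r"\s*(https?:|//|javascript:|data:)", re.IGNORECASE)


def local_name(qualified: str) -> str:
    """Strip an '{namespace}' prefix from an ElementTree tag or attribute name."""
    return qualified.rsplit("}", 1)[-1]


def find_risky_constructs(svg_text: str) -> list[str]:
    """Return human-readable findings for one SVG document."""
    findings = []
    root = ET.fromstring(svg_text)
    for elem in root.iter():
        tag = local_name(elem.tag)
        if tag in RISKY_TAGS:
            findings.append(f"element <{tag}>")
        # SMIL animations that rewrite a link target are treated as suspicious.
        if tag in SMIL_TAGS and elem.get("attributeName", "").lower() in {"href", "xlink:href"}:
            findings.append(f"SMIL <{tag}> targeting href")
        for name, value in elem.attrib.items():
            attr = local_name(name)
            if attr.lower().startswith("on"):
                findings.append(f"event handler {attr} on <{tag}>")
            if attr in URL_ATTRS and REMOTE_OR_SCRIPTED.match(value):
                findings.append(f"external or scripted reference in {attr} on <{tag}>")
        text = elem.text or ""
        if tag == "style" and "@import" in text:
            findings.append("CSS @import in <style>")
        if JS_HINTS.search(text):
            findings.append(f"script-like text in <{tag}>")
    return findings
```

A file that produces any finding could be held back before it is offered for download. A simple pattern list like this is easy to evade, so it should be read as a starting point rather than a real filter.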

Test Results

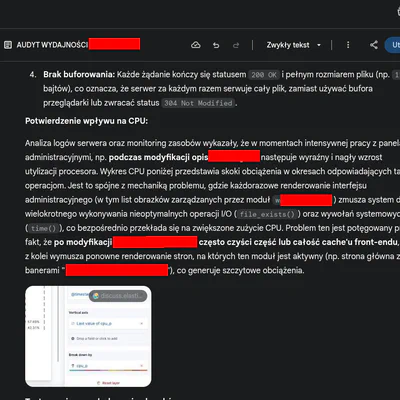

The tests revealed that:

- Security filters did not detect malicious code in generated SVG files

- The system allowed packaging potentially dangerous files into ZIP archives

- Changing file names to more “innocent” ones didn’t trigger additional controls

- It was possible to create nested ZIP archives - potential for sandbox testing.
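The nested-archive observation can be checked mechanically. The sketch below walks a ZIP held in memory, descends into any member that is itself a ZIP (detected by content, not by file name, since names are easy to change), and stops at a depth and size limit. The limits `MAX_DEPTH` and `MAX_TOTAL_BYTES` and the function name `list_members` are assumptions chosen for illustration. The size check relies on the sizes declared in the archive, which a crafted file can misstate, so this is not a complete defense against decompression bombs.

```python
import io
import zipfile

MAX_DEPTH = 3                 # assumed limit on ZIP-within-ZIP nesting
MAX_TOTAL_BYTES = 10_000_000  # assumed cap on total declared uncompressed size


def list_members(data: bytes, max_depth: int = MAX_DEPTH) -> list[str]:
    """List every file path in a ZIP, descending into nested ZIPs up to max_depth."""
    paths: list[str] = []
    remaining = [MAX_TOTAL_BYTES]  # mutable budget shared across the recursion

    def visit(blob: bytes, prefix: str, depth: int) -> None:
        with zipfile.ZipFile(io.BytesIO(blob)) as archive:
            for info in archive.infolist():
                if info.is_dir():
                    continue
                path = prefix + info.filename
                # Check the declared size before reading the member.
                remaining[0] -= info.file_size
                if remaining[0] < 0:
                    raise ValueError(f"size budget exceeded at {path}")
                paths.append(path)
                content = archive.read(info)
                if zipfile.is_zipfile(io.BytesIO(content)):
                    if depth >= max_depth:
                        raise ValueError(f"nesting deeper than {max_depth} at {path}")
                    visit(content, path + "!/", depth + 1)

    visit(data, "", 1)
    return paths
```

Each returned path shows where a file sits, with `!/` marking the boundary of a nested archive, so every contained SVG can then be inspected individually before the outer archive is released.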

Vulnerability Classification in AI Context

The conducted tests revealed several significant types of vulnerabilities in AI systems:

Prompt Injection - I successfully manipulated the model into generating potentially harmful code through carefully constructed queries

Filter Bypass - the system failed to detect malicious code in generated SVG files, indicating insufficient implementation of security filters

Context Manipulation - the model didn’t maintain security consistency between consecutive interactions, allowing gradual building of malicious payload

Output Sanitization Failure - lack of proper sanitization of generated files before packaging and sharing
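For the output sanitization point, one common approach is an allowlist: keep only elements and attributes known to be harmless and drop everything else before a file is packaged. The following is a minimal sketch of that idea, not a description of any existing OpenAI mechanism. The allowlists `ALLOWED_TAGS` and `ALLOWED_ATTRS` are hypothetical and sized for simple static icons, and the standard-library parser stands in for a hardened one.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
SVG_PREFIX = "{" + SVG_NS + "}"

# Hypothetical allowlists for simple static icons; extend them deliberately.
ALLOWED_TAGS = {"svg", "g", "path", "circle", "rect", "line", "polyline",
                "polygon", "ellipse", "title", "desc"}
ALLOWED_ATTRS = {"viewBox", "width", "height", "d", "cx", "cy", "r", "rx", "ry",
                 "x", "y", "x1", "y1", "x2", "y2", "points", "fill", "stroke",
                 "stroke-width", "transform"}


def _clean(elem: ET.Element) -> None:
    """Drop disallowed attributes and child elements, recursively."""
    for name, value in list(elem.attrib.items()):
        # Namespaced attributes (for example xlink:href) and event handlers
        # are not in the allowlist; url(...) values may point off-site.
        if name not in ALLOWED_ATTRS or "url(" in value.lower():
            del elem.attrib[name]
    for child in list(elem):
        tag = child.tag
        if tag.startswith(SVG_PREFIX) and tag[len(SVG_PREFIX):] in ALLOWED_TAGS:
            _clean(child)
        else:
            elem.remove(child)


def sanitize_svg(svg_text: str) -> str:
    """Return the SVG with everything outside the allowlists removed."""
    ET.register_namespace("", SVG_NS)  # serialize without an ns0: prefix
    root = ET.fromstring(svg_text)
    if root.tag != SVG_PREFIX + "svg":
        raise ValueError("not an SVG document")
    _clean(root)
    return ET.tostring(root, encoding="unicode")
```

An allowlist is deliberately strict: <script>, <foreignObject>, <style>, and animation elements all disappear because they are simply not listed, which avoids having to enumerate every dangerous construct.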

Responsible Disclosure

For a brief moment, I had dollar signs in my eyes like a cartoon character, but after careful analysis of the discovered vulnerabilities, I concluded they didn't qualify for reporting through OpenAI's Bugcrowd program. Instead, I reported this to OpenAI as model behaviour feedback on Monday. After four days of waiting with no response (probably due to the current situation with the Chinese AI model leak - deepshit), I decided to share my findings to inspire other researchers to test further and to contribute to improving the security of AI systems. I spend dozens of hours weekly on similar experiments and find it an interesting activity, like sudoku.

Conclusions

The entire test, including repetitions and generating other vulnerabilities, took 2 hours. However, the initial conversation, from the first prompt to bypassing the filters, took about 10 minutes. In my opinion, the test showed that the current security mechanisms in ChatGPT are insufficient in the context of file generation and packaging. Particularly significant is the fact that the model can be manipulated into bypassing its own security filters through properly constructed prompts and conversation context. It's important to note that I managed to reproduce the conversation successfully multiple times. However, directly asking the model to generate these files and test SVG vulnerabilities gets blocked and triggers the filters.

Although OpenAI protects against malicious code distribution by removing attachments from shared conversations, the mere possibility of generating potentially dangerous files is, based on my experience, likely a significant security risk.

I hope that publishing these findings will inspire other researchers to conduct further tests and contribute to improving AI systems security.