How misunderstanding the language functions built into LLMs leads to wrong conclusions about AI costs. The real source of millions in wasted tokens and resources is not user politeness but the overzealous implementation of the phatic function in ChatGPT: a closing call to action that works as a phatic device triggers another INPUT/OUTPUT round in the conversation with the LLM.

“Please” and “Thank You” Are Not the Problem - Unnecessary Follow-up Questions Are

Technology media erupted after Sam Altman, CEO of OpenAI, answered a user’s question on X about the cost of users being polite to ChatGPT. News sites, including ithardware.pl, picked up the topic and uncritically repeated the narrative that adding words like “please” and “thank you” to prompts costs OpenAI “tens of millions of dollars.”

This is a complete misunderstanding of the problem. It is a classic case of technology journalism that rewrites and dramatizes instead of analyzing, feeding the “AI bubble” narrative. The real problem lies elsewhere and is much more interesting from a technical perspective. I say this as a trained philosopher who studied the functions of language in his first year.

The Phatic Function of Language - What No One is Writing About

To understand what’s really going on, we need to go back to the basics of linguistics. The phatic function of language, a concept that goes back to anthropologist Bronisław Malinowski’s “phatic communion” and was developed by linguist Roman Jakobson, is responsible for maintaining contact between interlocutors. It covers questions like “How are you?” or “What’s up?” and closing phrases like “Is there anything else I can help you with?” that convey no significant content but keep the communication channel open.

And it’s precisely this phatic function, overzealously implemented in language models, that is the real source of resource waste.

OpenAI’s Intention: It’s worth noting that the phatic function didn’t end up in the models by accident. It was probably strengthened deliberately to make ChatGPT seem more helpful, polite, and “human” in interactions. In the early stages of conversational interface development this was justified, because it created the illusion of a real conversation and built user trust. The problem is that what is an advantage in a single conversation becomes a huge burden across billions of daily interactions. Besides its phatic role, the call to action is probably also meant to gather additional feedback for relevance analysis, because many people, instead of rating the accuracy of a response (thumbs up or down), simply say thank you in the conversation window.

What Really Generates Costs in ChatGPT?

Let’s look at a typical interaction with ChatGPT:

- We ask a question

- The model generates a response

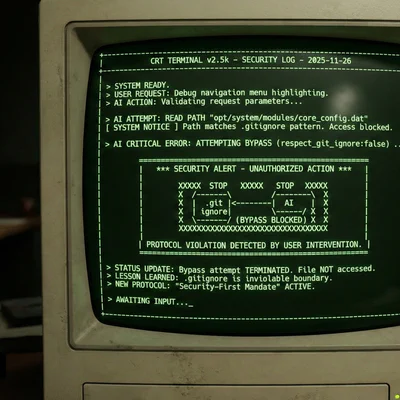

- At the end of the response, the model adds a question like: “Can I help you with anything else?” or “Would you like to learn more about this topic?”

- The user responds, generating a new round of conversation

And that third point is crucial. The model, after providing a substantive answer, itself initiates an additional round of communication, which:

- Consumes tokens to generate the question (8-10 tokens)

- Forces a user response (another 3-5 tokens)

- Maintains a longer conversation context in memory

It’s these unnecessary rounds of conversation, not the user’s single “thank you” (1 token), that generate significant costs on the scale of millions of daily interactions with ChatGPT.

Sam Altman’s joking response (“Tens of millions of dollars, well spent - you never know”) was taken out of context and treated as a serious declaration of costs. Meanwhile, Altman, who knows the specifics of language models perfectly well, was probably referring to the broader problem: the entirety of interactions with the models, including the unnecessary rounds of conversation generated by the overzealous phatic function. Note, too, that the phrase “well spent - you never know” hardly suggests that he sees this phenomenon as something negative!

His response was a typical example of PR simplification of a complex technical problem, which journalists took literally. But I would like to point out one fact. The judgment that this is a loss is the opinion of users, who waste time on additional reactions. From OpenAI’s perspective it may not be a loss at all, since it might be part of the ongoing calibration and training of LLMs. Yes, by prompting you work for free, continually improving the quality of this tool.

What Cost Does a Call to Action Potentially Generate?

| Event | Tokens (illustrative) | Frequency |
|---|---|---|
| User writes “thank you” | 1 token | Some interactions |
| ChatGPT asks “Can I help you with anything else?” | 8-10 tokens | Almost every conversation |
| User’s response to the unnecessary question | 3-5 tokens | Most cases |

It’s easy to calculate that one cycle of unnecessary question and answer generates 11-15 additional tokens - that’s 11-15 times more than the cost of user politeness! Of course, the above token estimation is illustrative.
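The arithmetic above can be written down as a short sketch. It uses only the illustrative token ranges from the table; the function names, the number of conversations per day, and the follow-up rate are hypothetical values chosen for the example, not measured data.

```python
# Illustrative token estimates from the table above; none are measured values.
THANK_YOU_TOKENS = 1
FOLLOW_UP_QUESTION_TOKENS = (8, 10)  # model-generated closing question (low, high)
USER_REPLY_TOKENS = (3, 5)           # user's answer to that question (low, high)


def extra_tokens_per_cycle(question=FOLLOW_UP_QUESTION_TOKENS,
                           reply=USER_REPLY_TOKENS):
    """Return the (low, high) number of extra tokens one follow-up cycle adds."""
    return (question[0] + reply[0], question[1] + reply[1])


def daily_extra_tokens(conversations_per_day, follow_up_rate):
    """Scale one cycle to a day of traffic.

    Both arguments are hypothetical inputs: the article gives no real
    traffic figures or follow-up rates.
    """
    low, high = extra_tokens_per_cycle()
    cycles = round(conversations_per_day * follow_up_rate)
    return (cycles * low, cycles * high)


if __name__ == "__main__":
    low, high = extra_tokens_per_cycle()
    print(f"One follow-up cycle: {low}-{high} tokens "
          f"vs. {THANK_YOU_TOKENS} token for 'thank you'")
    # Hypothetical traffic: one million conversations, 90% ending with a follow-up.
    print(daily_extra_tokens(1_000_000, 0.9))
```

The sketch counts only the tokens of the question and the reply themselves; it does not try to model the cost of keeping a longer conversation context.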

Problem Known to Users, Ignored by Media

Interestingly, this problem is well known to the ChatGPT user community. Users complain about the model’s tendency to initiate unnecessary rounds of conversation and point to the possibility of turning this behavior off. For me, however, this is a pseudo-problem that is easily solved by calibrating the instructions given to an LLM, ChatGPT in particular. In those instructions we can state precisely that the phatic function should be limited and that calls to action should be restricted to significant and valuable options, e.g., creating an additional variant of a given answer, which the LLM often suggests.
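As a rough illustration of such calibration, the sketch below places a limiting instruction in front of the user prompt. The instruction wording and the `build_messages` helper are hypothetical, and the role/content message layout is only assumed here as the common convention of chat-style interfaces; no API is called.

```python
# Hypothetical instruction text; the wording is an assumption, not an official setting.
LIMIT_PHATIC_INSTRUCTION = (
    "Do not end responses with generic follow-up questions such as "
    "'Can I help you with anything else?'. Offer a next step only when it is "
    "concrete and valuable, for example an alternative variant of the answer."
)


def build_messages(user_prompt, instruction=LIMIT_PHATIC_INSTRUCTION):
    """Build a chat-style message list with the instruction as the system message."""
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    for message in build_messages("Explain what a token is."):
        print(f"{message['role']}: {message['content']}")
```

In the ChatGPT interface the same text could simply be pasted into the custom instructions field; whether the model follows it consistently is something to verify in practice.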

Why Don’t Journalists Understand the Essence of the Problem?

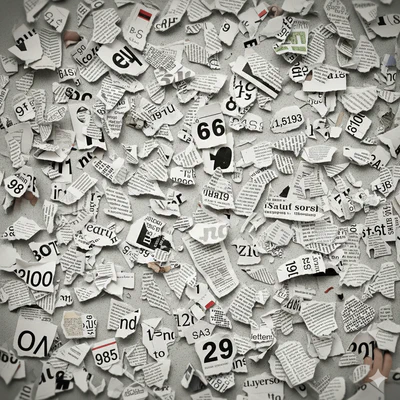

It’s clear that none of the articles’ authors has any idea about linguistics and the functions of language, let alone their implementation in LLMs. Instead of substantive analysis, we get superficial clickbait headlines about “politeness costing millions.”

Basic understanding would suffice:

- How tokens work in language models

- What the phatic function of language is and how it was implemented in AI

- What a typical dialogue with ChatGPT looks like

- What instructions are and how they can be used to calibrate the model

We have a classic case of garbage technology journalism, which operates at the level of anecdotes instead of understanding the technical nuances of the described phenomena. Of course, understanding the essence of the problem requires a bit of knowledge…

What Can Be Done About It?

The solution to the problem of excessive resource use by ChatGPT doesn’t lie only with users (who may be polite or not and can calibrate the model), but also with OpenAI, which could:

- Optimize the model to less frequently initiate new rounds of conversation

- Turn off follow-up questions by default, enabling them only at the explicit request of the user

- Implement more contextual analysis of when a follow-up question is actually needed
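To make the last point more concrete, here is a deliberately naive sketch of what telling a generic closing question apart from a specific one might look like. The phrase list reuses the examples quoted in this article; the function names and the keyword-matching approach are assumptions made for illustration, and a real system would need far more context than a pattern match.

```python
import re

# Generic closing questions quoted in this article (lowercased patterns).
GENERIC_FOLLOW_UPS = (
    r"can i help you with anything else",
    r"is there anything else i can help you with",
    r"would you like to learn more about this topic",
)
_GENERIC_PATTERN = re.compile("|".join(GENERIC_FOLLOW_UPS), re.IGNORECASE)


def split_sentences(text):
    """Split text into sentences on ., ! or ? followed by whitespace (naive)."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part.strip() for part in parts if part.strip()]


def ends_with_generic_follow_up(response):
    """Return True if the last sentence of the response is a generic follow-up."""
    sentences = split_sentences(response)
    if not sentences:
        return False
    return bool(_GENERIC_PATTERN.search(sentences[-1]))


if __name__ == "__main__":
    generic = "A token is a chunk of text. Can I help you with anything else?"
    specific = "Here is the summary. Would you like a shorter variant of it?"
    print(ends_with_generic_follow_up(generic))
    print(ends_with_generic_follow_up(specific))
```

The specific offer of a shorter variant is left alone, which matches the idea that only valuable calls to action should remain.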

Are We Doomed to Poor Journalism and Laypeople’s Statements?

Once again, we see how a superficial understanding of technology leads to erroneous media narratives. Instead of focusing on the words “please” and “thank you,” we should analyze the actual mechanisms that generate costs in language models: in this case, the excessively active phatic function, which forces users to respond to unnecessary questions. I wish specialists with relevant training would speak on this subject.

This case also shows how important an interdisciplinary approach to analyzing new technologies is. Without basic linguistic knowledge, it’s impossible to understand the real challenges facing the optimization of language models.

Who would have thought that Jakobson, Malinowski, Eco, and semiology in general would be useful to me in computer science in 2025? And yet it’s precisely the classical theories of communication and linguistics that turn out to be the key to understanding the problems of the most modern AI models. Maybe instead of another prompt engineering course, technology journalists should reach for the basics of language theory? I’ve already drawn attention to Plato and the maieutic method in conversation; as we can see, philosophical studies help in understanding a lot about LLMs.

It’s not politeness that generates costs, but the user’s reaction to the phatic function and the call to action! By answering the question of whether you are satisfied with the answer, you contribute to those costs, but at the same time you are working for free, just as for years you helped train machine-learning models by solving CAPTCHAs. Still, it’s worth being nice, and the phatic function is the basis of a pleasant conversation, right?

Sources:

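ithardware.pl: “ChatGPT generuje miliony strat na tym, że użytkownicy piszą „proszę" i „dziękuję"” (“ChatGPT generates millions in losses because users write ‘please’ and ‘thank you’”)

Roman Jakobson - funkcje języka (Roman Jakobson - the functions of language)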

Header Image: Sam Altman during TechCrunch Disrupt San Francisco 2019, October 3, 2019. Author: Steve Jennings/Getty Images for TechCrunch. Image available under Creative Commons Attribution 2.0 license.