How a fundamental error in a shop module created a self-sabotaging system that chokes the server and blocks indexing in the AI era. A case study from the front lines of the battle for visibility. This is a humorous story full of plot twists, where big money goes hand in hand with world-class professionalism.


DISCLAIMER: There is no NDA in this matter, and our client has consented to the publication of fragments for educational purposes. However, the CEO of company XXXXX, which developed the shop, is not our partner, and I have never been a subcontractor of that company. The logs and code fragments presented here have been anonymized but preserve the original structure of the problem. In my opinion, the contractor’s name doesn’t matter, because we encounter errors of this kind, in the context of AI indexing, across contractors and companies that build and host applications, shops, and websites.

The years 2024 and 2025 are a breakthrough period for specialists who build and maintain applications and internet solutions. When it comes to AI search indexing, anomaly monitoring, and making sure content is interpreted correctly, we are in a real “fever.” We implement changes at the application level and carry out rebranding for the AI era, but above all, we constantly monitor indexing and reindexing, for which we build custom solutions. In this specific case, the client hired me as a “safety valve” to make sure that a renowned e-commerce contractor would deliver a finished product. I have no intention of harming anyone, so for business reasons I’m anonymizing the contractor. Server, optimization, and DevOps were on our side. Above all, however, we were responsible for problem analysis, and the problem at hand, which the contractor itself evidently did not fully understand, has dramatic consequences in the AI era.

How We Found Ourselves in This Mess

At the beginning of 2025, as I wrote in one of my articles, serious crawling of shops for AI search began. On large implementations with hundreds of thousands of links and multi-domain environments, this meant millions of URLs and dozens of simultaneous bot visits, causing sudden spikes in resource utilization (CPU/IOPS). That forced not only optimization gymnastics and scaling but also hunting for weak spots in poorly written applications. Today I describe a project that company XXXXX was supposed to deliver in the second half of last year. As often happens with development miracles, the deadline was postponed multiple times, and the production version finally went live, with great pain and tears, in the second quarter of 2025.

While monitoring the shops with Prometheus, Grafana, Uptime, dedicated logs, and our own tools, we saw from the very beginning that the new application was, to put it mildly, far from stable. That was surprising for a new product that was supposed to surpass the company’s previous one from a few years earlier. We reported a series of concerns. First, we pointed out to the client that the contractor was misusing the PHP framework, placing overrides where they are neither safe nor in line with best practices. This led to bizarre requests to change the file access policy in NGINX, requests that ran contrary to security principles and common sense. However, the focus here is primarily on indexing and reputation in search results, so the client gave the green light to analyze problems in this area. Server logs, PHP debug output, the MySQL slow query log, and several proprietary tools pointed me to a series of serious errors. In my view, the server is always the bottleneck of indexing.

Let’s Create a DDoS with a Stupid Error and Block Indexing

Reading server signals and analyzing logs, I noticed a disturbing anomaly at many levels. I focused primarily on AI bots that had fallen into limbo, but indexing in Google Search was not proceeding as it should either. We constantly saw resources being “ground down” and repeated calls to the ####_######## module, written by a renowned, not to say top, software house. In the report presented to the client, we wrote:

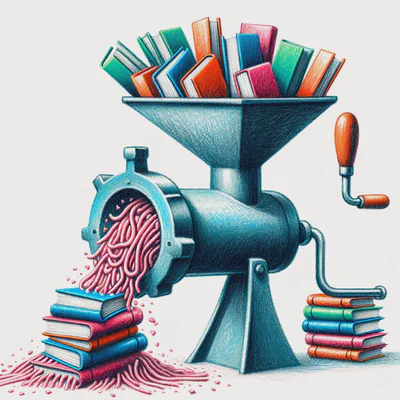

“Analyzing the logs, we detected a significant performance problem related to how URLs are generated for images and GIFs managed directly in the module. An improper implementation of the cache-busting mechanism results in a complete lack of graphics caching in users’ browsers, leading to unnecessary server overload.”

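In the files modules/####_########/src/Entity/BoxImage.php and modules/####_########/src/Entity/BoxGif.php, we found a fragment that appended the current timestamp, returned by PHP’s time(), to every image URL as a query parameter.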

When correlated with the log anomalies and resource utilization, this is no longer an innocent cache-busting technique. It is a caricature of one. Bots kept requesting the same resources because their URLs differed with every request. Appending the timestamp from time() to the URL means that every second produces a unique address for the same file. In the audit, we described it as follows:

“The error results from suboptimal and incorrect implementation of the cache-busting mechanism. (…) Web browsers treat each such URL as a new resource, completely preventing their caching.”
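To make the effect concrete, here is a minimal Python model of the pattern. It is not the module’s code, which is PHP and is not reproduced here. The function name `busted_image_url` is hypothetical, and the path and `rand=` parameter are taken from the server logs quoted later. Because time() has one-second resolution, two renders in the same second share a URL, but renders in different seconds produce different URLs for the same file.

```python
# Hypothetical model of the faulty URL builder. The real module is PHP and is
# not reproduced here; only the observable effect (rand=<Unix time>) is modeled.
def busted_image_url(filename: str, now: float) -> str:
    """Append the current Unix time, truncated to seconds like PHP's time()."""
    return f"/modules/xxx_xxxxxxxx/img/{filename}?rand={int(now)}"


name = "d660c5fdf9db6e050e71145baa5288bd.jpg"
url_a = busted_image_url(name, 1749440056.2)
url_b = busted_image_url(name, 1749440057.7)  # same file, rendered a second later

# A browser cache (or a crawler's URL list) keyed by URL sees two resources.
print(url_a)
print(url_b)
print(url_a == url_b)  # False
```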

Worst of all, this “technique” was completely unnecessary. The system already generated file names containing a unique hash (e.g., ddaf4fab11387b0956cb83.jpg), which is a correct and sufficient versioning mechanism: a new upload gets a new name, and therefore a new URL. Someone failed to understand the caching mechanism of the very system they were building expensive production solutions on. They also failed to understand the code they were writing and never analyzed its consequences, mindlessly burning dozens of work hours.
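Hash-based naming makes the query string redundant. The sketch below assumes the hash is derived from the file contents, using MD5 purely for illustration. The article’s example name has 22 hex characters, so the module’s real scheme may differ and is not known. Either way, the principle holds: an unchanged file keeps its URL, and a new upload gets a new URL. `hashed_filename` and `stable_image_url` are hypothetical names.

```python
import hashlib


def hashed_filename(content: bytes, ext: str = "jpg") -> str:
    # Assumption: name = hash of the file contents. MD5 is illustrative only;
    # the module's actual naming scheme is not shown in this article.
    return f"{hashlib.md5(content).hexdigest()}.{ext}"


def stable_image_url(filename: str) -> str:
    # No query string: the hash in the file name already acts as the version.
    return f"/modules/xxx_xxxxxxxx/img/{filename}"


v1 = b"image bytes, version 1"
v2 = b"image bytes, version 2"

url_first_render = stable_image_url(hashed_filename(v1))
url_later_render = stable_image_url(hashed_filename(v1))
url_after_upload = stable_image_url(hashed_filename(v2))

print(url_first_render == url_later_render)  # True: one cacheable URL per file
print(url_first_render == url_after_upload)  # False: a new upload busts the cache
```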

Cascade of Problems - Bottleneck Even Narrower

The faulty code led to a cascade of problems that paralyzed the shop on three key levels.

1. Burning Server Resources in AI Times

Every page refresh, both on the storefront and in the admin panel, forced the server to serve all graphics again, bypassing the cache. In the audit, we pointed to the direct consequences:

“An increased number of I/O operations (disk reads) and system calls. Increased CPU utilization. (…) In the admin panel, where lists with many images are often rendered (e.g., 10 images managed by this module), this means 10 unnecessary time() operations and 10 file_exists() operations on each list load.”
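The browser-side half of this cost can be illustrated with a toy model. It assumes a cache keyed by the full URL, a page showing 10 module images (the figure from the audit), and 20 reloads, each in a different second. It counts only HTTP requests that reach the server, not the extra time() and file_exists() calls made while rendering. `server_hits` is a hypothetical helper.

```python
def server_hits(n_images: int, reloads: int, cache_busting: bool) -> int:
    """Count image requests that miss a URL-keyed cache and reach the server."""
    cache: set[str] = set()
    hits = 0
    for second in range(reloads):  # each reload happens in a different second
        for i in range(n_images):
            url = f"/img/{i:02d}.jpg"
            if cache_busting:
                # Mimics the faulty ?rand=<time()> suffix; the base value is arbitrary.
                url += f"?rand={1_749_440_000 + second}"
            if url not in cache:  # miss: the request goes to the server
                cache.add(url)
                hits += 1
    return hits


print(server_hits(10, 20, cache_busting=True))   # 200: every image, every reload
print(server_hits(10, 20, cache_busting=False))  # 10: each image fetched once
```

With the timestamp, server traffic grows as images × reloads. Without it, traffic stays at one request per image until the file itself changes.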

2. Ruining SEO and Core Web Vitals

A lack of graphics caching is a direct path to a Core Web Vitals disaster. Pages loaded more slowly, which hurt user experience (UX) and the LCP (Largest Contentful Paint) metric, one of the Core Web Vitals that Google uses as a ranking signal. It matters for AI as well: AI crawlers are hungry for data, but for valuable data. The result is a lower SEO reputation in both Google and AI search.

3. Grinding AI Bots in Digital Purgatory (Limbo)

This is the most interesting and topical aspect of the problem. Analysis of the Nginx logs revealed the sad truth. Bots, including OpenAI’s GPTBot/1.2, fell into an infinite loop, downloading the same image every second under a new URL. This generated indexing loops that could lead the bot to lower the page’s score and that simply “overloaded” the shop with over half a million links. Watching such a self-inflicted DDoS was an interesting experience.

A fragment of the server logs:

20.171.207.141 - - [11/Jun/2025:15:48:11 +0200] "GET /modules/xxx_xxxxxxxx/img/d660c5fdf9db6e050e71145baa5288bd.jpg?rand=1749440056 HTTP/2.0" 200 1290 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)"

20.171.207.141 - - [11/Jun/2025:15:48:12 +0200] "GET /modules/xxx_xxxxxxxx/img/d660c5fdf9db6e050e71145baa5288bd.jpg?rand=1749536867 HTTP/2.0" 200 1290 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)"

etc.

I would have thought that a simple look at the logs, combined with elementary duty and decency when delivering a visibly unstable application, would prompt someone to ask what was draining the shop’s indexing potential. The contractor’s CEO stated in conversation that there is too much noise in the logs to read anything sensible from them (I will refrain from commenting). In the audit, we summarized this threat unambiguously:

“This results in inefficient use of the ‘crawl budget’, repeated downloads of the same resources under different URLs, and an inability to maintain a stable index for a given view. As a result, it may harm the site’s reputation in search results by suggesting that duplicate resources exist.”
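Noisy or not, this particular pattern is easy to isolate mechanically. Group requests by user agent and path, ignore the query string, and count how many distinct query strings each path was fetched with. The sketch below is a minimal Python version. It assumes nginx’s default “combined” log format with one entry per line, and `find_query_variants` is a hypothetical helper, not an existing tool.

```python
import re
from collections import defaultdict
from urllib.parse import urlsplit

# nginx "combined" format: ip - user [time] "request" status bytes "referer" "ua"
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<target>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def find_query_variants(lines, min_variants=2):
    """Map (user agent, path) -> number of distinct query strings seen."""
    variants = defaultdict(set)
    for line in lines:
        m = LINE_RE.match(line)
        if m is None:
            continue  # skip lines in other formats
        target = urlsplit(m["target"])
        variants[(m["ua"], target.path)].add(target.query)
    return {key: len(qs) for key, qs in variants.items() if len(qs) >= min_variants}


UA = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
      "GPTBot/1.2; +https://openai.com/gptbot)")
PATH = "/modules/xxx_xxxxxxxx/img/d660c5fdf9db6e050e71145baa5288bd.jpg"
sample = [
    f'20.171.207.141 - - [11/Jun/2025:15:48:11 +0200] "GET {PATH}?rand=1749440056 '
    f'HTTP/2.0" 200 1290 "-" "{UA}"',
    f'20.171.207.141 - - [11/Jun/2025:15:48:12 +0200] "GET {PATH}?rand=1749536867 '
    f'HTTP/2.0" 200 1290 "-" "{UA}"',
]

for (ua, path), count in find_query_variants(sample).items():
    print(count, path, ua)
```

On real logs, a static image fetched by a single crawler with hundreds of distinct query strings is worth investigating as a possible cache-busting loop.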

Absurdly Simple Solution to Critical Implementation Error

Fixing this critical error, which had cost the contractor dozens of work hours, is trivial and takes literally 15 seconds. It is not the only problem, mind you: we found many more at the MySQL query level. In this specific case, however, it was enough to remove the faulty code fragment.

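In practice, this meant deleting the part of the URL-building code in BoxImage.php and BoxGif.php that appended the time()-based parameter. Each image URL is then built from the already hashed file name alone.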

That’s it. After this change, it was only necessary to clear the shop’s cache and start reindexing anew. CPU utilization dropped significantly during AI bot visits.

Lack of Interest in Business Process Optimization

I prepared a detailed, 9-page technical report containing code analysis, log evidence, load charts, and precise repair instructions. The report was written at the client’s request, because the renowned software house claimed that “it’s the server’s fault, because it works on their side.” The document reached my client and the CEO of the agency responsible for the implementation. I proposed symbolic compensation for pointing out an error present in a number of their clients’ shops and gave them several days to respond.

Ultimately, the CEO was not interested. The same error had been found in other shops built by this contractor. Even so, he decided the problem did not concern him because the fix had already been implemented, which was not true. Instead, he offered 1000 PLN for pointing out the error, an amount that probably reflects their general code quality standards quite accurately. In 15 years of running a company, I don’t remember a situation like this… fortunately, the client who pays for monitoring covered the cost of supervising the premium contractor. However, the problem certainly affects other customers running the same shop engine with this contractor’s custom modifications. Their visibility in the index is significantly reduced, which can be verified.

Think About AI Before AI Forgets About You

That’s why this case study is reaching you: as a warning and a lesson for the entire industry. We are at a crucial moment, and I expect that companies that don’t deal with problems like this will disappear.

The detected error teaches us several things:

Fundamentals are everything: Cache-busting is a powerful tool, but used without understanding, it becomes a weapon of mass destruction for performance. If you don’t understand something, don’t implement it.

The AI Search era is already here: Ignoring how AI bots index the web is a direct path to digital oblivion. Technical problems that once went unnoticed can now completely cut you out of results in ChatGPT, Claude, or Perplexity. This is especially significant for e-commerce. Today it’s difficult, even bad, but next year will be even more interesting. This is happening.

A culture of ignorance is expensive: Most shocking of all is the agency’s lack of reaction. Professionalism doesn’t consist in being infallible or avoiding responsibility, but in the ability to admit mistakes and fix them. This matters all the more when the client pays for an audit that serves a ready-made solution on a silver platter. Evidently, someone can’t calculate how many work hours they burned chasing their own tail, failing to deliver the shop and answering client tickets about an unstable application. I’ll leave it at that and move on, because I have work to do.

Oh, and one more thing. Blocking AI bots, as renowned agencies and hosting companies are doing right now, is not a solution. It’s a short-term strategy with a sad ending for both sides, and many hosting providers and DevOps teams are doing it with a smile. In my opinion, this is unethical.

Companies that don’t adapt their processes and technical knowledge to the new reality where AI is the main information intermediary will simply disappear. And their clients will disappear with them.

… to be continued…?